Issue #1 – From Pilots to ROI: Making AI Projects Succeed

As the inaugural issue, this edition carries my genuine excitement about contributing meaningful value to the incredible work you already do.

Whether you’re a CEO preparing for your next board conversation on how to keep up with “AI” –whatever that really means for your organization 😊– or an IT executive deciding which business function to prioritize to deliver measurable impact, one reality stands clear: powerful LLMs have already reached billions of devices worldwide.

These models are improving daily and delivering remarkable results. Every interaction with them –whether out of curiosity or habit– builds subtle yet growing pressure on every technology leader to do something with AI. For anyone leading a tech-enabled organization (which, let’s face it, covers most of today’s market cap), this has become a race to demonstrate execution rather than enable genuine experimentation.

This dynamic often leads to what I call the “AI FOMO loop”: hurried deployments, followed by recalibrations, re-scopings, and the occasional press release to appease stakeholders –all under mounting expectations from investors eager for one thing: ROI.

Real numbers. Real impact.

Yet, very few companies have reached that stage of clarity. This isn’t just my opinion –it’s based on dozens of conversations with executives over the past few months and is echoed in the latest MIT and S&P Global reports (and more recommended reads below).

Fortunately, my experience at Teammates.ai and with customers who’ve successfully unlocked tangible results has surfaced some consistent learnings I’ll be sharing in future issues.

Now, while the market noise can feel deafening, don’t mistake it for chaos. As someone who’s lived through more than two decades of tech hype cycles, I can confidently say: this time is different.

The leap in capability –and the resulting economic transformation– is more profound than anything we’ve seen before. New AI giants will emerge overnight, and others will vanish just as fast. But one thing is certain: the underlying infrastructure being built is irreversible and will leave an indelible mark on how we live and work. Whether you believe AI is overhyped or underhyped, the noise is unprecedented. For executives like you, the real challenge is cutting through it to see what truly drives ROI.

That’s precisely what this newsletter aims to help you do - welcome to Noise Free and thank you for subscribing!

P.S. This inaugural post is a bit longer than future issues will be, so your email provider may truncate it. You can access the full post and the downloadable boardroom brief by clicking on the link below.

Noise Free TL;DR

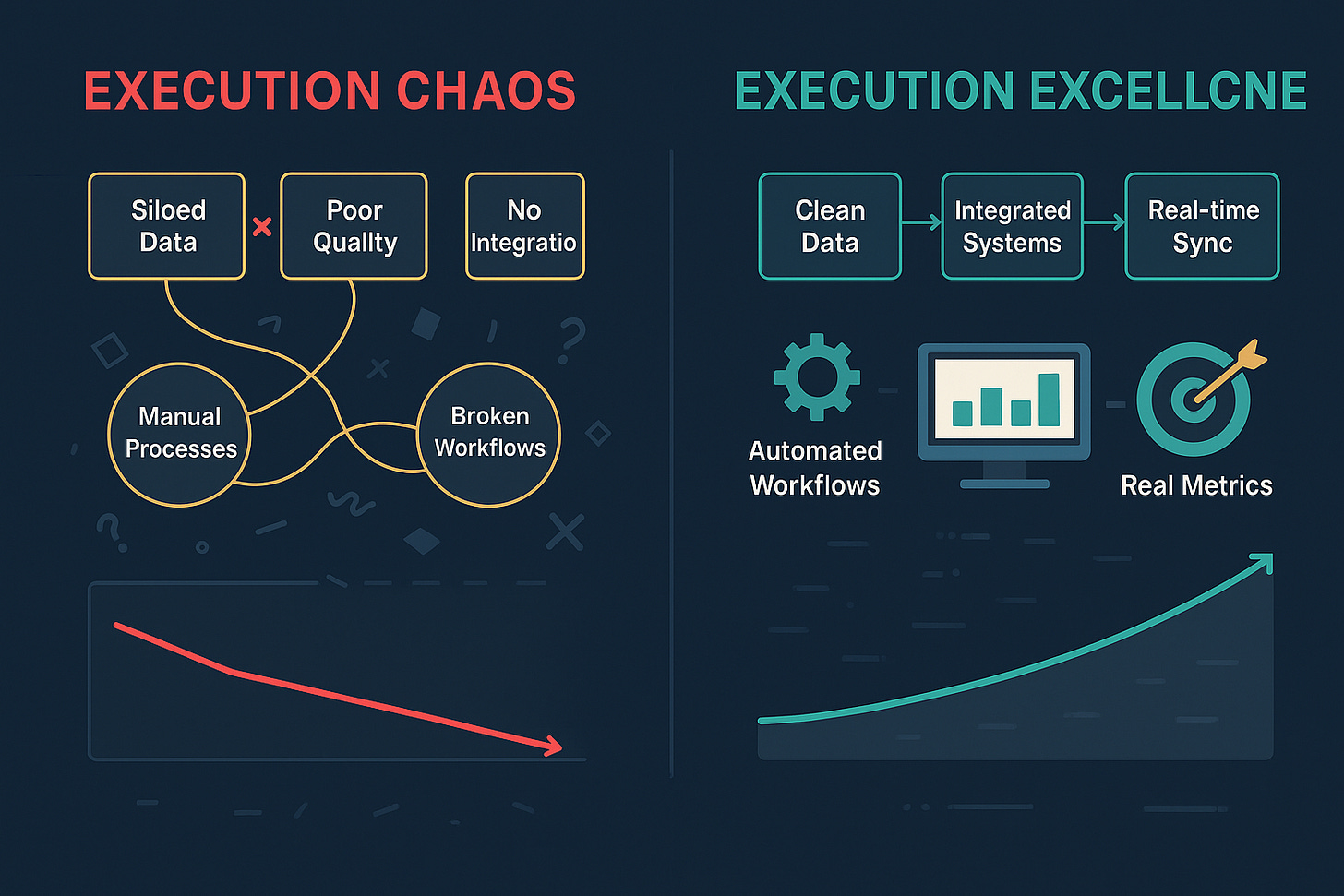

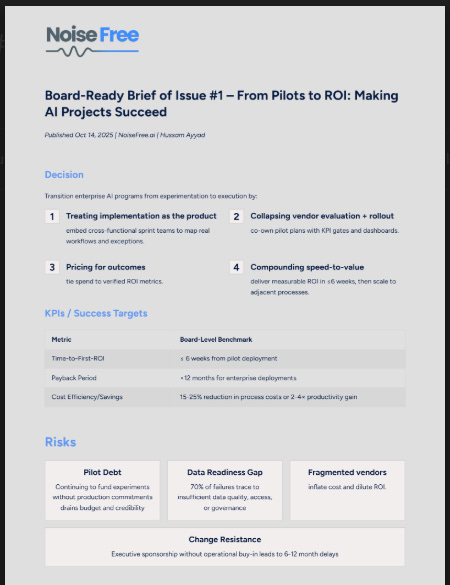

AI pilots fail because of execution, not technology. Research shows that about 95 % of AI pilots never deliver ROI, and 42 % of enterprises report no ROI from the AI they have deployed. The culprits are neglected workflows, data quality, governance and change management.

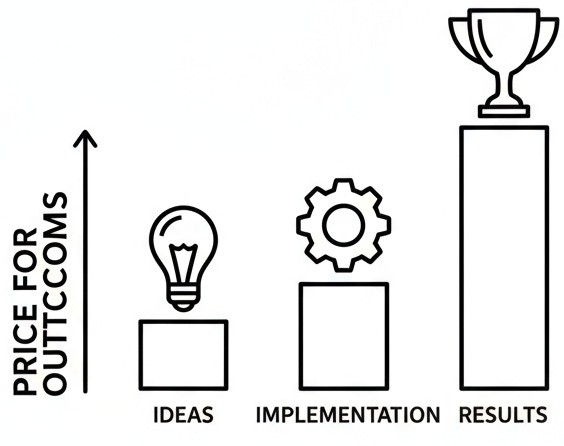

Integrate deeply and price for outcomes. Treat implementation as the product: map real processes, handle exceptions and embed AI into existing systems. Use collaborative pilots with data‑readiness gates and shared dashboards, and tie pricing to verified business impact (e.g., handle time or first‑contact resolution).

Start small and scale quickly. Launch a high‑volume, low‑risk use case within 4–6 weeks; validate the ROI, then replicate to adjacent processes using reusable prompts, policies and dashboards.

From Pilots to ROI: Making AI Projects Succeed – Lead Essay

Across enterprises worldwide, AI pilots abound –but too few cross the chasm to measurable ROI. MIT’s “GenAI Divide: State of AI in Business 2025” drew on 150 executive interviews and 300 AI deployments and found that about 95 % of AI pilots fail to deliver any financial return, with only about 5 % achieving rapid revenue acceleration. This echoes other surveys: Constellation Research reported that 42 % of enterprises have deployed AI without seeing any ROI, while IDC found that 88 % of AI pilots never make it to production. The problem isn’t ambition or model quality; it’s execution. In a world where models and APIs are increasingly commoditized, the durable advantage comes from how well you integrate AI into the business: data pipelines, exception handling, governance and frontline adoption.

In this week’s issue, I draw on what I’ve witnessed first-hand in the positive-ROI deployments we carried out with Teammates.ai. Until our official case studies are published, company names and industries will remain anonymized.

What follows is a practical roadmap for leaders who want outcomes, not experiments. I deliberately avoided jargon such as “blueprint” or “playbook” because there is no such thing, especially once you factor in the uniqueness of every business and its management dynamics.

A roadmap to delivering success with AI projects:

1) Treat Implementation as the Product

Don’t let a demo define success. Start every initiative with a workflow shadow sprint: embed a small team with end users for three to five days to document work as done –not the tidy SOP on paper. SOPs are great starting resources when planning and scoping such projects, but in our experience, shadowing users reveals a lot more than what your business has historically documented. It helps you uncover the patches and temporary fixes put in place in the past, and that often means a new perspective on your existing offerings to customers. Taking this approach will help you produce three artifacts:

Work map: The real task graph –inputs, systems touched (e.g., Oracle/SAP cores), and handoffs across departments.

Exception catalog: The 10–20 edge cases that break brittle automations (policy checks, missing fields, approvals).

Control plan: Where humans stay in the loop, rollback paths, audit logs, and data residency constraints.

Important note on data hygiene and annotation: clean, well‑annotated data is vital. Messy data derails AI projects and yields bad predictions. Andrew Ng notes that most ML work is data prep, so invest early in data organization and governance.

A thin policy‑ops layer (rules engine plus guardrails) lets the same AI capability be tuned per market without rewrites. Use a simple maturity model as you iterate: Level 1 = assistive scripts, Level 2 = embedded in workflow with humans‑in‑the‑loop, Level 3 = tightly coupled to systems of record with telemetry. Moving from Level 1 to Level 2 is where most ROI shows up; Level 3 is where it sticks.
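For the technically inclined, here is a minimal sketch of what such a thin policy layer could look like. Everything in it is an assumption made for illustration: the market codes, the refund thresholds, and the names `MarketPolicy` and `route_refund` are hypothetical and do not describe any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarketPolicy:
    """Per-market rules. All fields and values are hypothetical examples."""
    languages: tuple[str, ...]   # languages the AI may answer in
    max_auto_refund: float       # above this amount, a human must approve


# Illustrative markets and thresholds only; real values come from the business.
POLICIES = {
    "AE": MarketPolicy(languages=("ar", "en"), max_auto_refund=200.0),
    "DE": MarketPolicy(languages=("de", "en"), max_auto_refund=50.0),
}


def route_refund(market: str, language: str, amount: float) -> str:
    """Return 'auto' or 'human_review' for a refund request."""
    policy = POLICIES.get(market)
    # Guardrail: unknown market or unsupported language goes to a human.
    if policy is None or language not in policy.languages:
        return "human_review"
    # Rule: large amounts keep a human in the loop.
    if amount > policy.max_auto_refund:
        return "human_review"
    return "auto"


if __name__ == "__main__":
    print(route_refund("AE", "ar", 150.0))
    print(route_refund("DE", "de", 150.0))
```

The point of the design is that tuning a market means editing one entry in `POLICIES`, while the AI capability and the routing logic stay untouched.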

Publicly available case studies illustrate what’s possible when execution is disciplined. BMW integrated AI‑powered computer vision on its assembly lines; factories reported up to a 60 % reduction in defects, and by using no‑code tools and synthetic data the company cut the time needed to implement new quality checks by two‑thirds. Walmart optimized truck routing and load planning with AI, saving about $75 million in a single fiscal year and cutting 72 million pounds of CO₂ emissions. These successes emerged because teams treated implementation as the product, invested in data and process mapping, and focused on measurable outcomes.

Put another way: if you’ve recently hired a “consultant”, vendor or tech provider who promised you the world but has not yet shown you any of the above elements, I strongly urge you to question their approach and treat that as a red flag.

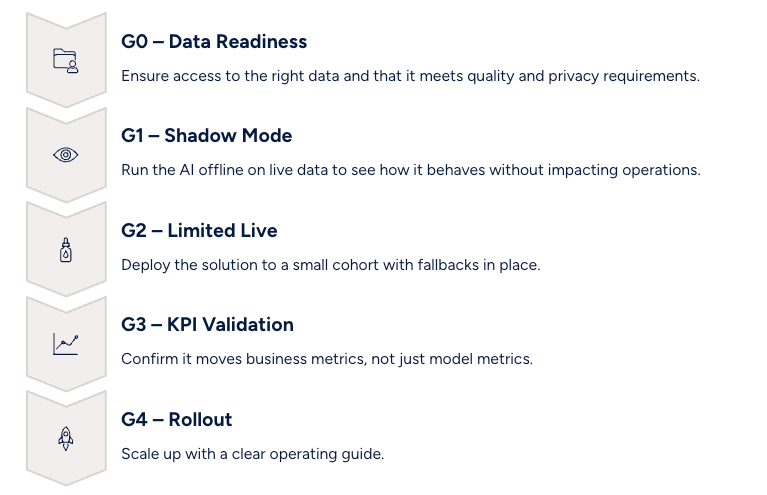

2) Collapse Vendor Evaluation and Rollout

You can’t honestly judge an AI tool without seeing it operate on your own data and workflows. Rather than accepting a vendor’s pitch and then signing a contract, insist on a collaborative pilot design document that you and your vendor co‑create before any deal is finalized. That document should spell out:

Milestone gates:

Leadership: assign a named executive sponsor on your side and require the vendor to do the same; set up weekly 30‑minute KPI check‑ins.

Visibility: demand shared dashboards (no fancy spaceship-style dashboards, please! Just 3 to 4 numbers to follow consistently) so you can monitor deflection rates, handle time, error classes, and override rates. This transparency helps you and the vendor stay aligned and catch issues early.
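To make the "3 to 4 numbers" idea concrete, here is a rough sketch of how a shared dashboard could be computed from raw interaction records. The `Interaction` fields and the exact metric definitions are assumptions for illustration; your own definitions should be agreed in the pilot design document. Error classes are left out to keep the sketch short.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """One customer interaction. Field names are hypothetical."""
    handled_by_ai: bool     # resolved without reaching a human agent
    handle_seconds: float   # total time to resolve
    overridden: bool        # a human changed or rejected the AI output


def dashboard(interactions: list[Interaction]) -> dict[str, float]:
    """Compute three headline numbers over a reporting period."""
    total = len(interactions)
    if total == 0:
        return {"deflection_rate": 0.0, "avg_handle_seconds": 0.0, "override_rate": 0.0}
    return {
        # Assumed definition: share of interactions the AI resolved alone.
        "deflection_rate": sum(i.handled_by_ai for i in interactions) / total,
        "avg_handle_seconds": sum(i.handle_seconds for i in interactions) / total,
        # Assumed definition: share of interactions where a human overrode the AI.
        "override_rate": sum(i.overridden for i in interactions) / total,
    }


if __name__ == "__main__":
    week = [
        Interaction(True, 60.0, False),
        Interaction(True, 120.0, False),
        Interaction(False, 300.0, True),
        Interaction(True, 120.0, False),
    ]
    print(dashboard(week))
```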

The MIT report notes that purchasing specialized AI tools succeeds about 67 % of the time, while internal builds succeed only around one‑third as often. Yet most enterprises misallocate resources: more than half of generative‑AI budgets are devoted to sales and marketing tools, but MIT found the biggest ROI in back‑office automation –eliminating business process outsourcing, cutting external agency costs and streamlining operations. A rigorous pilot plan with shared ownership shortens decision cycles and builds trust through evidence, not promises.

3) Price for Outcomes

Pricing should reinforce the behavior you want: business impact. A hybrid model works well –a base subscription plus a performance component tied to verified outcomes. Make it auditable:

Outcome SLA: Define the KPI (e.g., reduce average handle time by 15 %, increase first‑contact resolution by 8 %, cut back‑office cycle time by 25 %).

Verification method: Data sources, baseline period, seasonality adjustments, confidence bands.

Guardrails: Caps or floors to prevent outlier months from skewing incentives.

Change windows: Only count results after change‑freeze dates to avoid moving‑baseline games.

Align incentives so both parties obsess over the same scoreboard. The MIT study documented cases where back‑office automation delivered $2 million to $10 million in annual savings and 30 % reductions in external agency spend –clear evidence that outcome‑based pricing can unlock shared value.
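As a worked illustration of the hybrid model, the sketch below computes a monthly fee from a base subscription plus a performance component tied to average handle time (AHT), with a cap and a floor as guardrails. The linear bonus formula and every number in it are assumptions chosen for the example, not a recommended price list.

```python
def monthly_fee(
    base_fee: float,
    baseline_aht: float,
    actual_aht: float,
    target_reduction: float = 0.15,   # the Outcome SLA, e.g. 15 % lower AHT
    bonus_at_target: float = 10_000.0,
    bonus_cap: float = 15_000.0,      # guardrail against outlier months
    bonus_floor: float = 0.0,         # no negative bonus in a bad month
) -> float:
    """Base subscription plus a capped bonus that scales with AHT reduction."""
    if baseline_aht <= 0:
        raise ValueError("baseline_aht must be positive")
    # Verified reduction relative to the agreed baseline period.
    reduction = (baseline_aht - actual_aht) / baseline_aht
    # Assumed formula: bonus grows linearly with the share of target achieved.
    raw_bonus = bonus_at_target * (reduction / target_reduction)
    bonus = max(bonus_floor, min(bonus_cap, raw_bonus))
    return base_fee + bonus


if __name__ == "__main__":
    # Baseline AHT of 400 seconds; this month came in at 340 seconds.
    print(monthly_fee(base_fee=20_000.0, baseline_aht=400.0, actual_aht=340.0))
```

In this sketch, hitting the 15 % target exactly pays the full target bonus, an exceptional month is capped, and a month where handle time got worse pays the base fee only.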

4) Compound Speed‑to‑Value – Cannot be emphasized enough

Credibility compounds. Start with one micro‑use case you can ship in four to six weeks and measure end‑to‑end: contact‑center triage, claims intake classification, invoice extraction, KYC document QA. Choose with a Value Beachhead Test:

High volume (daily repetitions).

Clear KPI (time, cost, accuracy, Net Promoter Score).

Data availability (no heroic integrations).

Low blast radius (easy rollback).
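The four criteria above can be turned into a simple screening step. The sketch below is one possible way to do it, assuming a pass/fail check on each criterion and a hypothetical minimum of 100 daily repetitions; the `UseCase` fields and the candidate list are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """A candidate micro-use case. Field names are hypothetical."""
    name: str
    daily_volume: int      # high volume: daily repetitions
    has_clear_kpi: bool    # time, cost, accuracy or NPS
    data_ready: bool       # no heroic integrations needed
    easy_rollback: bool    # low blast radius


def passes_beachhead_test(uc: UseCase, min_daily_volume: int = 100) -> bool:
    """All four criteria must hold; the volume threshold is an assumption."""
    return (
        uc.daily_volume >= min_daily_volume
        and uc.has_clear_kpi
        and uc.data_ready
        and uc.easy_rollback
    )


def shortlist(cases: list[UseCase], min_daily_volume: int = 100) -> list[UseCase]:
    """Keep passing candidates, highest volume first."""
    passing = [c for c in cases if passes_beachhead_test(c, min_daily_volume)]
    return sorted(passing, key=lambda c: c.daily_volume, reverse=True)


if __name__ == "__main__":
    candidates = [
        UseCase("invoice extraction", 800, True, True, True),
        UseCase("claims intake classification", 1500, True, False, True),
        UseCase("KYC document QA", 250, True, True, True),
        UseCase("board report drafting", 2, True, True, True),
    ]
    for uc in shortlist(candidates):
        print(uc.name)
```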

Ship, measure the delta and then replicate to adjacencies (e.g., from triage → suggested responses → QA summarization). Standardize what worked into a reuse kit: prompts/policies, exception patterns, dashboards and change‑management scripts. The second and third wins will arrive faster than the first because the organization now trusts the method.

Success examples reinforce this approach. JPMorgan’s Contract Intelligence system (COIN) automates review of complex loan agreements; it now performs the equivalent of 360,000 staff hours annually, freeing lawyers to focus on higher‑value tasks. CarMax used Microsoft’s Azure OpenAI Service to summarize over 100,000 customer reviews into 5,000 highlights, accomplishing in months what would have taken 11 years manually. Shell uses AI to monitor more than 10,000 assets, process 20 billion sensor readings each week, and run 11,000 models generating 15 million predictions daily, enabling predictive maintenance that reduces downtime. These use cases started small, delivered clear wins and scaled.

What “Good” Looks Like 👍

Language and culture flexibility: Solutions must handle multi‑language inputs and adapt to local context.

Data residency and security: Offer flexible hosting options (cloud, private VPC, on‑premises) without sacrificing iteration speed.

Legacy‑aware integration: Provide adapters for ERP systems (Oracle, SAP), CRM or ITSM platforms; prefer event‑driven architectures.

Front‑line adoption: Identify champions in the call center, branch or back office; establish clear override/feedback channels.

Auditability: Maintain policy logs, monitor red‑flag events and conduct regular reviews with risk/compliance teams.

A Simple Operating Rhythm

Monday: KPI readout (business + risk); decide one knob to turn.

Mid‑week: Test in shadow or limited live; collect exception patterns.

Friday: Publish “So what” notes: what moved, what broke, what we’ll try next week.

Quarterly: Graduate winners to Level 3 (system‑of‑record integration) or retire politely.

Bottom Line

The winners won’t be the loudest about models; they’ll be the quietest about operational integration. When 95 % of AI pilots fail to deliver ROI and 42 % of enterprises abandon most of their AI projects, excellence in execution becomes the true differentiator. Treat implementation as the product, collapse the vendor evaluation and rollout, pay for outcomes, and compound quick wins. Do so and you’ll move beyond pilot purgatory –delivering ROI your board can feel, not just slides they can read.

Noise Free Signal:

Time-to-First-ROI

Benchmark: High-performing enterprises deliver measurable ROI in ≤ 6 weeks from pilot kickoff.

Signal: Treat implementation as the product. The faster you close the feedback loop, the more political capital and budget momentum you earn.

BONUS: Outcome-Linked Spend

Benchmark: Less than 30 % of enterprise AI budgets are tied to verified business metrics (MIT 2025).

Signal: Budget alignment predicts ROI. The rest is noise.

Noise Free Intel (4 Worthy Links + Why It Matters)

Microsoft: Enterprise AI maturity in five steps: Our guide for IT leaders – Practical maturity framework showing how to evolve from pilot to scalable AI capability with governance, data, and change levers. The enterprise world is already moving to multiple agents.

The $4.6T Services-as-Software Opportunity (Foundation Capital) – Implementation is the moat; outcomes beat features. You’re not alone: enterprise deployments are almost always unique.

McKinsey: GenAI in GCC – 2024 Report Card – Adoption is high, impact is lagging. Lessons from the winners (a lot has changed since Nov 2024, but the report card is still relevant).

BCG GCC AI Pulse – Readiness gaps and where to invest first.

Noise Free Q&A

This week’s prompt:

“Which AI pilot did you scale, what KPI moved, and what governance made it stick?”

Reply directly to me with your answers and, with your permission, we may feature your answer in the next issue.

Board-Ready Brief (1-Pager)

You can download it here (or click on the image below):

Yes - you’ve earned it by reading to the end!

💬 Reply with a challenge you’re facing. Prefer a private briefing? Email me to book one.