2026 AI Reality: 5 Uncomfortable Truths Your Vendor Doesn't Want You to Believe

Five predictions for enterprise AI in 2026:

As we close out 2025, the AI landscape looks nothing like it did twelve months ago. Models that were “state of the art” in January are now deprecated. Startups that raised at billion-dollar valuations got absorbed in 10-day deals. And the debate about whether AI would transform enterprise work has quietly shifted to who will control that transformation.

Here are five predictions for where enterprise AI is heading in 2026, and what they mean for your strategy.

Noise Free TL;DR

5 predictions (and uncomfortable truths) for enterprise AI in 2026:

(1) “Hackquisitions” become the default M&A playbook as giants dodge regulators – your startup vendor may disappear overnight. (2) Inference costs will dominate your AI budget as production scales. (3) The browser becomes the default AI interface whether you planned for it or not. (4) Agentic AI creates new enterprise risk categories requiring governance frameworks. (5) Build vs. buy is settled; 76% of AI use cases are now purchased, not built.

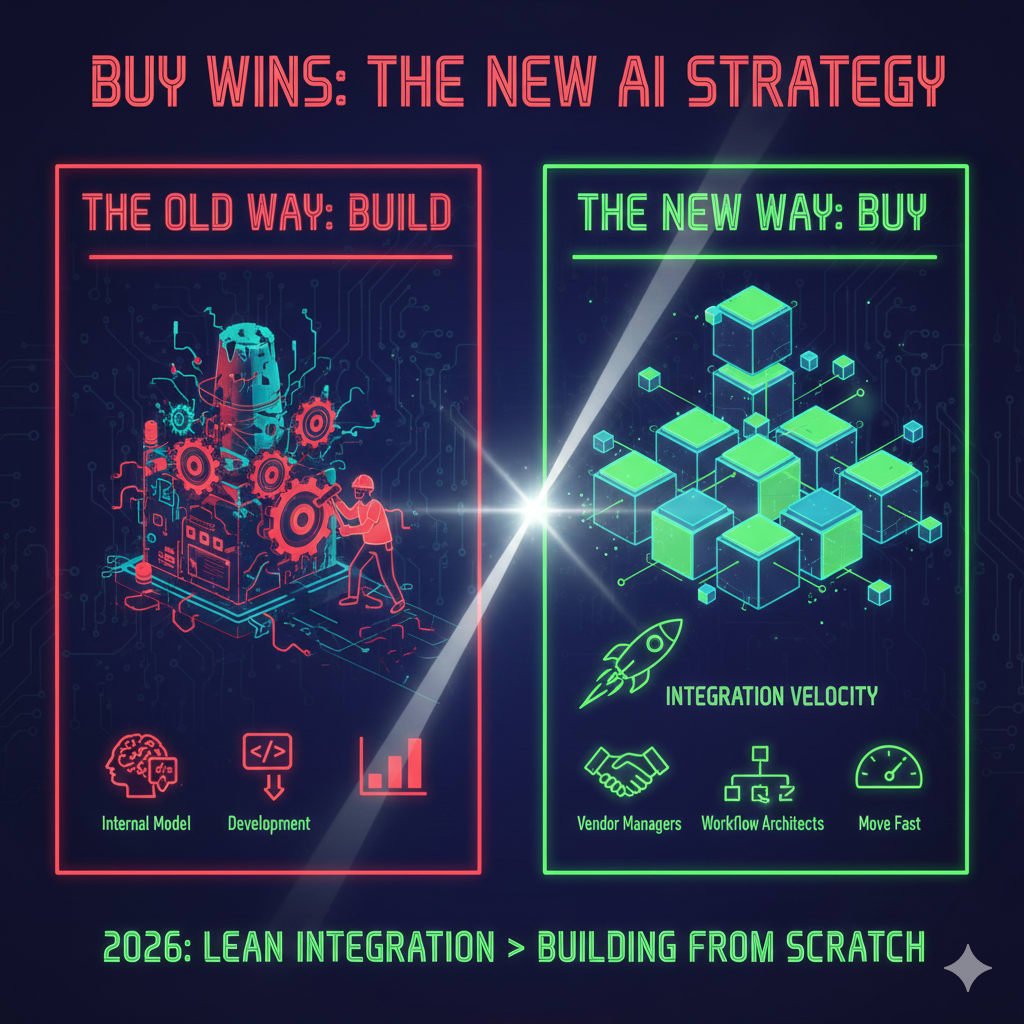

The lead essay goes deep on #5: the companies winning aren’t building AI – they’re assembling it. Your 2026 AI team mandate should shift from model development to integration velocity. The talent that matters now is the talent that moves fast with vendor tools, not the talent rebuilding from scratch.

The only cost is your attention for the read. I promise to make it worthwhile.

The "Build vs. Buy" Debate Is Over – ‘Buy’ Won - Lead Essay

For years, the conventional wisdom was that serious enterprises would build their own AI. Bloomberg trained BloombergGPT. Walmart built Wallaby. Teams were confident that with the right data, domain expertise, and engineering talent, they could own their AI destiny.

That era is over.

Menlo Ventures just released their State of Generative AI report. The headline number: enterprises spent $37 billion on generative AI in 2025, a 3.2x increase from $11.5 billion in 2024. But the more important number is buried in the methodology: 76% of AI use cases are now purchased, not built. Last year, that split was 53% bought, 47% built.
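For readers who want to sanity-check those figures, here is a quick back-of-the-envelope calculation. It uses only the numbers quoted above; the variable names are mine, not the report's.

```python
# Figures as quoted above from the Menlo Ventures report.
spend_2024_bn = 11.5
spend_2025_bn = 37.0

# Year-over-year growth multiple in generative AI spend.
growth_multiple = spend_2025_bn / spend_2024_bn

# Share of AI use cases purchased rather than built.
bought_2024 = 0.53
bought_2025 = 0.76

# Shift toward "buy", in percentage points.
shift_points = (bought_2025 - bought_2024) * 100

print(f"Spend growth: {growth_multiple:.1f}x")
print(f"Shift toward buy: {shift_points:.0f} percentage points")
```

The 3.2x multiple checks out, and the move from 53% to 76% is a 23-point swing in a single year.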

In twelve months, the enterprise AI market decisively shifted from “we’ll figure it out ourselves” to “we’ll buy the best and integrate fast.”

The Evidence Is Everywhere

Look at what happened in December alone.

Accenture announced it’s training 30,000 professionals on Claude as part of a new Anthropic partnership. The same week, they announced tens of thousands more would be equipped with ChatGPT Enterprise through an OpenAI collaboration. These aren’t pilot programs. They’re operating model transformations – betting that the future of consulting is vendor orchestration, not proprietary model development.

OpenAI released data showing ChatGPT Enterprise messages grew 8x year-over-year. Usage of Custom GPTs – where companies codify institutional knowledge into assistants – jumped 19x. BBVA alone runs over 4,000 custom GPTs. That’s not experimentation. That’s integration at scale.

Meta just paid $2 billion for Manus, an AI agent startup that went from $0 to $100M ARR in eight months. Why build agentic capabilities in-house when you can acquire a team that’s already proven product-market fit, and absorb them in ten days?

The pattern is consistent: the companies moving fastest aren’t building AI. They’re buying it, integrating it, and moving on to the next problem.

Why “Build” Lost

Three forces killed the build-your-own thesis:

1. Model improvement velocity outpaced internal development cycles.

GPT-5 launched in August. GPT-5.1 in November. GPT-5.2 in December. Three major model upgrades in four months. If your internal team spent 2025 building a custom model, it was obsolete before it shipped. The foundation model providers are iterating faster than any enterprise can match.

2. The talent math stopped working.

The best AI researchers want to work at frontier labs, not enterprise IT departments. Accenture’s 30,000-person Claude training bet is an implicit admission: it’s easier to train your existing workforce on vendor tools than to recruit AI specialists who’d rather be at Anthropic or OpenAI.

3. Integration became the bottleneck, not capability.

The hard part of enterprise AI isn’t getting a model to generate text. It’s connecting that model to your ERP, your CRM, your approval workflows, your compliance requirements. That integration work is the same whether you build or buy. However, if you buy, you start integrating immediately instead of spending 18 months on model development first.

What This Means for Your AI Team

If your AI strategy still centres on building proprietary models, it’s time for an honest reassessment.

The enterprises winning in 2026 won’t have the best models. They’ll have the best integration velocity – the ability to adopt new AI capabilities faster than competitors, plug them into existing workflows, and extract value before the next model generation makes the current one obsolete.

This requires a different org design. The 2024 AI team was researchers and ML engineers trying to build differentiated models. The 2026 AI team is integration specialists, vendor managers, and workflow architects who can:

Evaluate new model releases in days, not months

Maintain abstraction layers that allow model swaps without rebuilding applications (or even better, procure LLM-independent enterprise-grade applications and adaptable systems)

Negotiate contracts that anticipate vendor consolidation

Train business users on new capabilities as they ship
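To make the abstraction-layer point concrete, here is a minimal sketch of what "swap the model without rebuilding the application" can look like. Everything in it is hypothetical: `TextModel`, `ModelRouter`, `EchoModel`, and `summarize_ticket` are illustrative names, and `EchoModel` is a stand-in where a real adapter would wrap a vendor SDK call.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Protocol


class TextModel(Protocol):
    """Minimal interface every backend adapter must satisfy (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend; a real adapter would call a vendor SDK here."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRouter:
    """Holds registered backends and forwards calls to the active one."""

    def __init__(self) -> None:
        self._backends: Dict[str, TextModel] = {}
        self._active: Optional[str] = None

    def register(self, key: str, backend: TextModel) -> None:
        self._backends[key] = backend
        if self._active is None:
            self._active = key  # first registered backend becomes the default

    def use(self, key: str) -> None:
        if key not in self._backends:
            raise KeyError(f"unknown backend: {key}")
        self._active = key

    def complete(self, prompt: str) -> str:
        if self._active is None:
            raise RuntimeError("no backend registered")
        return self._backends[self._active].complete(prompt)


def summarize_ticket(router: ModelRouter, ticket: str) -> str:
    # Application code depends only on the router, never on a vendor SDK.
    return router.complete(f"Summarize: {ticket}")


router = ModelRouter()
router.register("vendor_a", EchoModel("vendor_a"))
router.register("vendor_b", EchoModel("vendor_b"))

first = summarize_ticket(router, "Invoice 42 is late")
router.use("vendor_b")  # the swap: one line, no application changes
second = summarize_ticket(router, "Invoice 42 is late")
```

The design choice that matters is that `summarize_ticket` never imports a vendor library. In practice the harder work is in prompts, evaluation sets, and output formats that differ between models, which a thin interface like this does not solve on its own.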

The job isn’t to build AI. The job is to be the fastest at making AI useful.

The Exception That Proves the Rule

There’s one category where building still makes sense: proprietary data moats.

If your competitive advantage is a dataset no one else has – and that dataset requires custom model architecture to exploit – building may still be defensible. But even here, the trend is toward fine-tuning vendor models on proprietary data rather than training from scratch.

For everyone else, the math is clear. The $37 billion enterprises spent on AI in 2025 wasn’t R&D. It was procurement. And the teams behind the 76% of use cases that were bought rather than built are the ones shipping to production while the builders are still tuning hyperparameters.

The Uncomfortable Implication

Here’s what nobody wants to say out loud: if “buy” won, then your internal AI team’s mandate needs to change, or shrink.

The companies that spent 2025 building AI centers of excellence focused on model development are sitting on expensive teams optimized for the wrong problem. The companies that built lean integration squads are already on their third or fourth production deployment.

This doesn’t mean AI talent is worthless. It means the valuable AI talent in 2026 is the talent that can move fast with vendor tools, not the talent that wants to rebuild everything from scratch.

Bottom Line

The “build vs. buy” debate consumed enormous energy over the past three years. It’s settled now. Buy won – not because buying is philosophically superior, but because the market moved too fast for internal builds to keep up.

The 2026 question isn’t whether to build or buy. It’s how fast you can integrate what you’ve bought – and whether your organization is structured to keep up with a market where today’s state-of-the-art is next quarter’s legacy system.

The noise was about building. The signal is about assembling.

Stay noise free.

New Year Bonus: Predictions #1 – 4

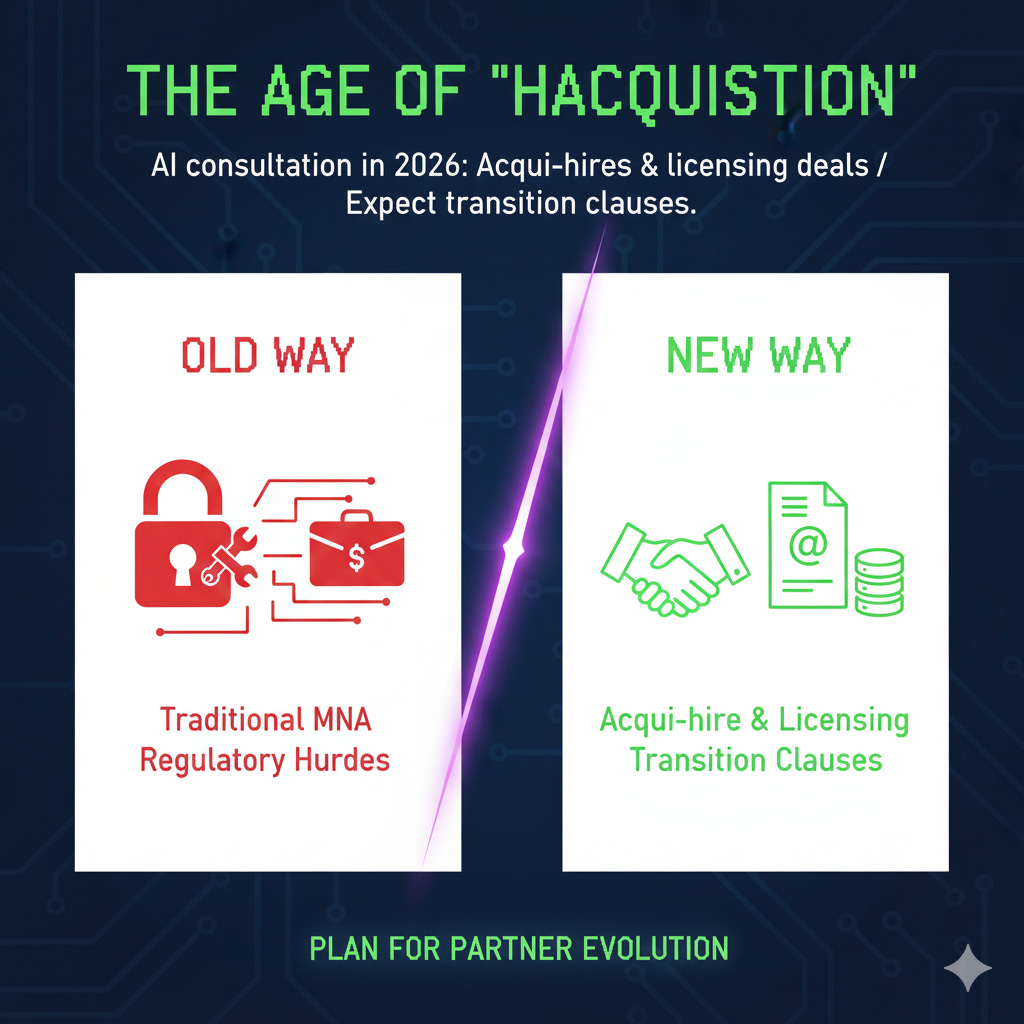

Prediction #1: The “Hackquisition” Becomes the Default M&A Playbook

NVIDIA’s $20B Groq deal and Meta’s $2B Manus acquisition both closed in days – structured as “licensing agreements” to dodge regulatory scrutiny. One analyst called it “keeping the fiction of competition alive.”

Expect this to become the norm. In 2026, major AI capability consolidation will happen through acqui-hires and licensing deals, not traditional acquisitions.

What this means for you: The startup you’re evaluating today could be absorbed by your hyperscaler next quarter. Build vendor contracts with transition clauses. Assume your AI partner’s independence has an expiration date.

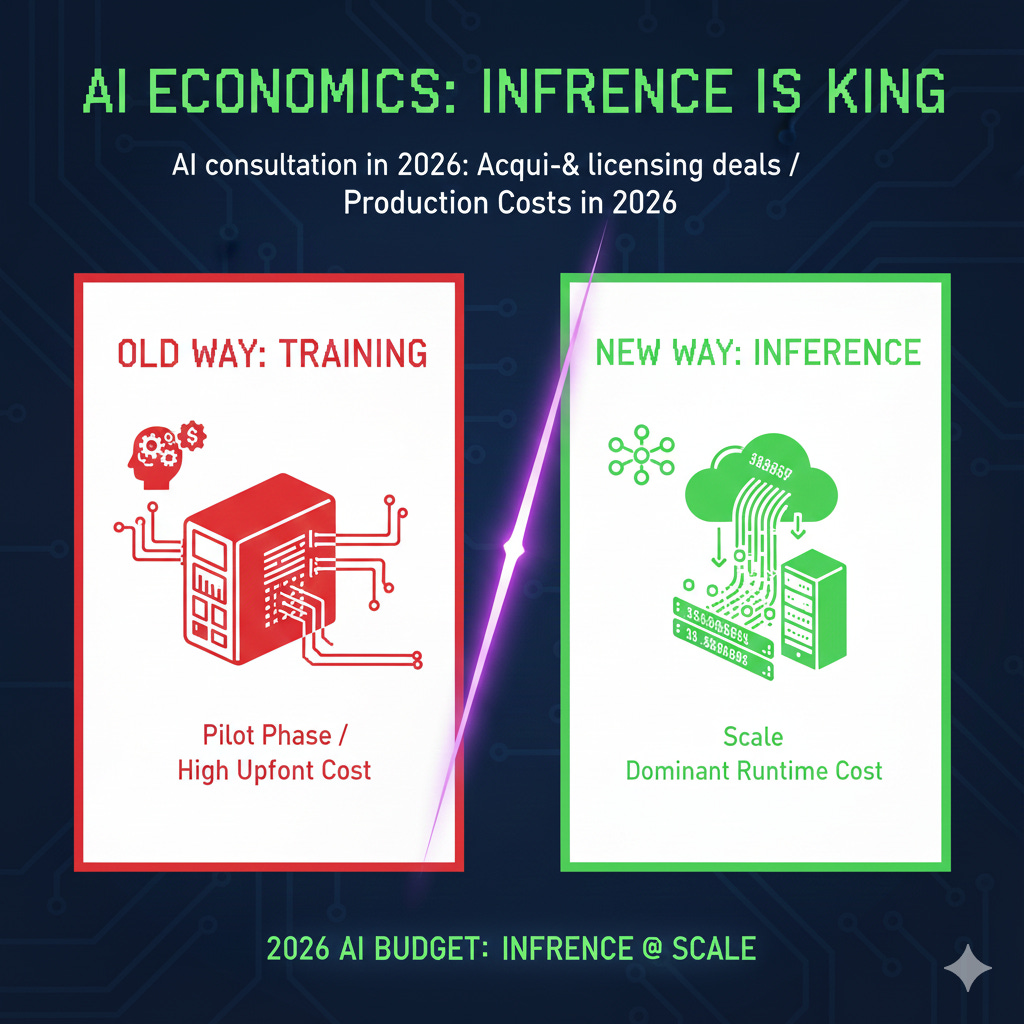

Prediction #2: Inference Costs Will Dominate Your AI Budget

NVIDIA paid $20B for Groq’s inference chip technology. Bank of America’s read: “implies recognition that while GPU dominated training, the shift toward inference could require specialized chips.” As AI moves from experimentation to production, inference – not training – becomes the cost center.

What this means for you: Your 2026 AI budget should model inference costs at scale, not just pilot-phase API calls. The economics of production AI look nothing like the economics of experimentation.
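A rough way to see the gap between pilot and production economics is to model token-based pricing at two volumes. This is a sketch under stated assumptions: the prices, token counts, and request volumes below are placeholders I made up for illustration, not any vendor's actual rates, and `InferenceScenario` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceScenario:
    """Token-priced API usage; all values are supplied by the caller."""

    requests_per_day: int
    input_tokens_per_request: int
    output_tokens_per_request: int
    usd_per_million_input_tokens: float
    usd_per_million_output_tokens: float

    def cost_per_request_usd(self) -> float:
        return (
            self.input_tokens_per_request * self.usd_per_million_input_tokens
            + self.output_tokens_per_request * self.usd_per_million_output_tokens
        ) / 1_000_000

    def monthly_cost_usd(self, days: int = 30) -> float:
        return self.cost_per_request_usd() * self.requests_per_day * days


# Placeholder numbers for illustration only (not real vendor pricing).
pilot = InferenceScenario(
    requests_per_day=500,
    input_tokens_per_request=2_000,
    output_tokens_per_request=500,
    usd_per_million_input_tokens=3.0,
    usd_per_million_output_tokens=15.0,
)
production = InferenceScenario(
    requests_per_day=200_000,
    input_tokens_per_request=2_000,
    output_tokens_per_request=500,
    usd_per_million_input_tokens=3.0,
    usd_per_million_output_tokens=15.0,
)

pilot_monthly = pilot.monthly_cost_usd()
production_monthly = production.monthly_cost_usd()

print(f"Pilot:      ${pilot_monthly:,.2f} per month")
print(f"Production: ${production_monthly:,.2f} per month")
```

With these placeholder inputs the pilot rounds to a couple of hundred dollars a month, while the same workload at production volume lands around $81,000. The unit price never changed; only the request count did, which is why pilot invoices are a poor guide to a production budget.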

Prediction #3: The Browser Becomes the Default AI Interface

OpenAI Atlas, Microsoft Edge Copilot Mode, Google Gemini in Chrome, Perplexity Comet: all shipped in late 2025. Browser-native AI captures context across every tab, email, and document your employees touch. Most enterprise AI governance policies don’t address browsers.

What this means for you: The browser is becoming the operating system for knowledge work. If your AI governance doesn’t mention browsers, it’s already incomplete.

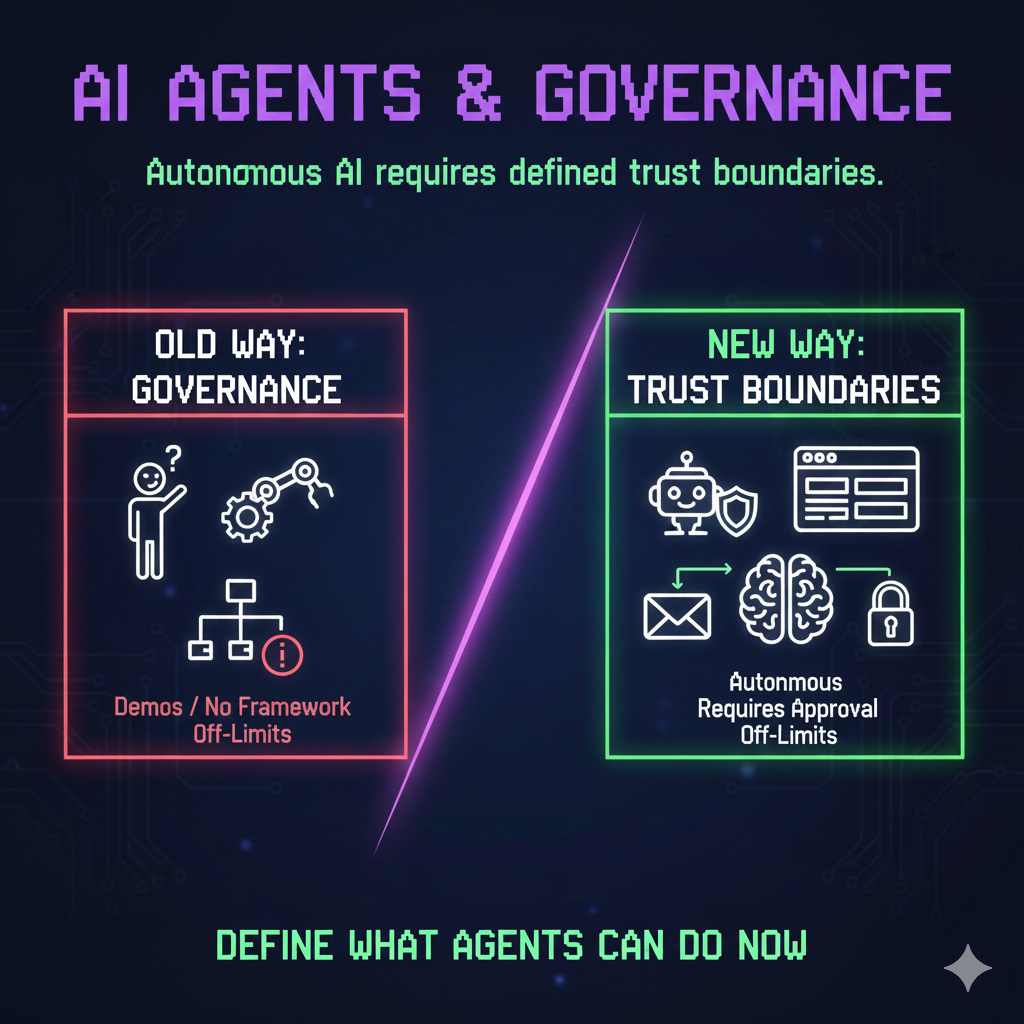

Prediction #4: Agentic AI Will Create a New Category of Enterprise Risk

Meta acquired Manus ($100M ARR in 8 months selling AI agents). Microsoft launched Agent 365. Google shipped agentic Gemini in Chrome. OpenAI acknowledged future AI models could become “offensive cyber weapons.” Agents are moving from demos to production, and most enterprises have no governance framework for autonomous AI taking actions on their behalf.

What this means for you: 2026 is the year to build an agent governance policy. Define trust boundaries: what can agents do autonomously, what requires human approval, and what’s off-limits entirely.
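Those three trust boundaries translate naturally into a small policy table. The sketch below is illustrative only: the action names, the `POLICY` mapping, and the `authorize` function are hypothetical, and a real policy would also need identity, logging, and scope limits.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"                        # agent may act autonomously
    REQUIRE_APPROVAL = "require_approval"  # a human must sign off first
    DENY = "deny"                          # off-limits entirely


# Hypothetical action names; a real policy would come from your own risk review.
POLICY = {
    "read_calendar": Decision.ALLOW,
    "draft_email": Decision.ALLOW,
    "send_external_email": Decision.REQUIRE_APPROVAL,
    "issue_refund": Decision.REQUIRE_APPROVAL,
    "delete_customer_record": Decision.DENY,
}


def authorize(action: str, approved_by_human: bool = False) -> bool:
    """Return True if the agent may perform the action right now."""
    # Default-deny: anything not explicitly listed is treated as off-limits.
    decision = POLICY.get(action, Decision.DENY)
    if decision is Decision.ALLOW:
        return True
    if decision is Decision.REQUIRE_APPROVAL:
        return approved_by_human
    return False


print(authorize("draft_email"))
print(authorize("issue_refund"))
print(authorize("issue_refund", approved_by_human=True))
print(authorize("wire_transfer"))
```

The default-deny lookup is the important design choice: new agent capabilities ship faster than policies get updated, so an unlisted action should fail closed rather than slip through.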

Noise Free Signal

Buy Rate Acceleration Benchmark: 76% of enterprise AI use cases purchased in 2025, up from 53% in 2024. Signal: The build vs. buy debate is over. Integration speed is the new differentiator.

Enterprise AI Spend Benchmark: $37 billion spent on generative AI in 2025 (3.2x YoY growth). Signal: This isn’t R&D anymore; it’s procurement. Budget accordingly.

Vendor Consolidation Velocity Benchmark: $22B+ in AI “hackquisitions” closed in December 2025 alone (NVIDIA-Groq, Meta-Manus). Signal: Your AI vendor’s independence has an expiration date. Build transition clauses into contracts.

Noise Free Intel (4 Worthy Links)

Menlo Ventures: 2025 State of Generative AI in the Enterprise – The definitive data on enterprise AI spend ($37B), buy vs. build (76% purchased), and where dollars are flowing. Required reading for 2026 planning.

CNBC: NVIDIA’s Groq Deal Structured to Keep “Fiction of Competition Alive” – Analyst breakdown of how the $20B “licensing agreement” dodges antitrust while consolidating inference chip capabilities. The M&A playbook for 2026.

TechCrunch: Meta Just Bought Manus – How a 10-day deal absorbed a startup doing $100M ARR in AI agents. Shows the speed at which agentic AI is being consolidated by the giants.

Accenture: Anthropic Partnership Announcement – 30,000 professionals trained on Claude, plus a Claude Center of Excellence. The clearest signal that “buy and integrate” is the new enterprise AI operating model.

Board-Ready Brief (1-Pager Summary)

You can download here (or click on the image below)

Nice article and predictions. As long as the security challenges are solved, agentic browsers could be big winners in some enterprises already operating primarily browser-based apps.

One thing I'd suggest: agentic coding tools will make integration a bit easier for some firms, as they'll be able to more easily get into the poorly documented middleware and refactor APIs so they're rich enough and accurate enough for the AI era.